If you’re like me, you probably have a serious issue with digital hoarding: you refuse to delete anything, and you methodically collect and categorize all the things.

We need help, yes.

In the meantime, there’s the option of taking your carefully hand-encoded AVC video files and giving them the ol’ modernization treatment with our new best friend, HEVC.

If you’re confused at this point, here’s a short glossary:

- AVC: Advanced Video Coding, also known as H.264 (x264 is a specific encoder for it): The video codec used in pretty much all modern video.

- HEVC: High Efficiency Video Coding, also known as H.265 (x265 is a specific encoder for it): The new(er) kid on the block and successor to AVC.

Your standard run-of-the-mill video file that obviously fell from a truck will usually come in either an mp4 or mkv container with AVC video and some kind of audio.

If you want to reduce the file size of the video and keep the quality reasonably high, re-encoding the file into HEVC seems to be a valid plan. Obviously it would be technically better to rip the source medium again into HEVC directly instead of transcoding an existing lossily-encoded file, but… yeah, that would be more work and less bashscriptable.

On a side note, if you want a little primer on video compression, I heartily recommend Tom Scott’s video on a related issue.

Anyway, long story short:

So I took the first episode of Farscape, which I had conveniently lying around in a neat little Matroska-packaged AVC+DTS combo, cut out 15 minutes, and then re-encoded this 15-minute clip in a bunch of ways.

Why? Well, initially I wanted to figure out how best to transcode my existing Farscape rips to save the most space while maintaining a reasonable amount of quality, so I did the scientific(ish) thing and created a bunch of samples.

And yes, HEVC encoding without proper hardware support is a pain and I spent way too much CPU time on this little project, but soon™ we will have reached the point where HEVC is the new de facto standard, and when that point comes I will be ready.

Methodology i.e. “stuff I did”

Look, I’m not an expert on video encoding, I’m not familiar with the internals of the encoders, which means I know shit about the standards and the software implementations. I’m just some guy who wanted to save disk space and decided to do some testing in the process.

I re-encoded the aforementioned video clip using the following settings:

- Codecs: x264 and x265

- CRF: 1, 17, 18, 20, 21, 25, 26, 51

- Presets: placebo, slower, medium, veryfast, ultrafast

The result is 80 files of varying quality and size.
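As a rough sketch of how that grid could be scripted: the snippet below builds one HandBrakeCLI command per combination. It assumes the command-line version of HandBrake was used (the post only names the HandBrake version later on), the source file name is hypothetical, and the audio options are left out entirely. The output names follow the pattern visible in the results table.

```python
from itertools import product

CODECS = ["x264", "x265"]
CRFS = [1, 17, 18, 20, 21, 25, 26, 51]
PRESETS = ["placebo", "slower", "medium", "veryfast", "ultrafast"]

SOURCE = "farscape_sample.mkv"  # hypothetical name for the 15-minute clip


def output_name(codec, crf, preset):
    # Mirrors the naming seen in the results table, e.g.
    # farscape_sample_x264.AAC2.0.CRF01-medium.mkv
    return f"farscape_sample_{codec}.AAC2.0.CRF{crf:02d}-{preset}.mkv"


def handbrake_command(codec, crf, preset):
    # -e selects the video encoder, -q the constant quality (CRF) value,
    # --encoder-preset the speed preset. Audio flags are omitted here.
    return [
        "HandBrakeCLI",
        "-i", SOURCE,
        "-o", output_name(codec, crf, preset),
        "-e", codec,
        "-q", str(crf),
        "--encoder-preset", preset,
    ]


# One command per (codec, CRF, preset) combination; nothing is executed here.
jobs = [handbrake_command(c, q, p) for c, q, p in product(CODECS, CRFS, PRESETS)]

print(len(jobs))          # 2 codecs x 8 CRFs x 5 presets
print(" ".join(jobs[0]))  # first command, for inspection
```

Each entry in `jobs` could then be handed to `subprocess.run`, ideally one at a time, since a single placebo encode can keep a CPU busy for a long while.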

Judging file size is pretty straightforward: Just compare the file sizes. Magic.

As for quality, that’s a difficult one, and since I lack a proper testing setup and about three dozen people to judge the subjective quality of each clip, I’ll just be using the SSIM (structural similarity index), calculated by comparing each clip with the original clip, and see how far that gets me.
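The post doesn’t say which tool produced the SSIM values, so the following is only one possible way to get them: ffmpeg ships an `ssim` filter that compares two inputs and logs a summary line. The helper names and the sample log line are made up for illustration; only the overall value in it is taken from the results table.

```python
import re
import subprocess


def ssim_command(encoded, original):
    # ffmpeg's ssim filter compares the first input against the second
    # and writes a summary to stderr; "-f null -" discards the video output.
    return ["ffmpeg", "-i", encoded, "-i", original,
            "-lavfi", "ssim", "-f", "null", "-"]


def parse_ssim(log_text):
    # The summary line carries the overall score as "All:<value>".
    match = re.search(r"All:([0-9.]+)", log_text)
    return float(match.group(1)) if match else None


def measure_ssim(encoded, original):
    # Runs ffmpeg and extracts the overall SSIM; requires ffmpeg on the PATH.
    result = subprocess.run(ssim_command(encoded, original),
                            capture_output=True, text=True)
    return parse_ssim(result.stderr)


# Shortened, illustrative log line (not real output from this experiment).
sample = "SSIM Y:0.93 (11.5) U:0.95 (13.0) V:0.95 (13.0) All:0.935785 (11.9)"
print(parse_ssim(sample))
```

Both files need the same resolution and frame count for the comparison to be meaningful, which should hold when every sample is encoded from the same 15-minute clip.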

So to start, here are the first five rows:

| file | codec | preset | CRF | size | ssim |
|---|---|---|---|---|---|
| farscape_sample_x264.AAC2.0.CRF01-medium.mkv | x264 | medium | 1 | 2097.20 | 0.935785 |
| farscape_sample_x264.AAC2.0.CRF01-placebo.mkv | x264 | placebo | 1 | 1484.04 | 0.935858 |
| farscape_sample_x264.AAC2.0.CRF01-slower.mkv | x264 | slower | 1 | 1530.13 | 0.935846 |
| farscape_sample_x264.AAC2.0.CRF01-ultrafast.mkv | x264 | ultrafast | 1 | 3887.30 | 0.935909 |
| farscape_sample_x264.AAC2.0.CRF01-veryfast.mkv | x264 | veryfast | 1 | 2315.65 | 0.935663 |
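The codec, preset and CRF columns can be recovered from the file names alone. Here’s a small sketch of that, assuming every file follows the naming pattern shown in the table; the function name is just for illustration.

```python
import re

# Matches names like farscape_sample_x264.AAC2.0.CRF01-medium.mkv
NAME_PATTERN = re.compile(
    r"_(?P<codec>x26[45])\..*\.CRF(?P<crf>\d+)-(?P<preset>\w+)\.mkv$"
)


def parse_name(filename):
    # Splits a sample file name into the columns used in the table.
    match = NAME_PATTERN.search(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename}")
    return {
        "codec": match.group("codec"),
        "preset": match.group("preset"),
        "crf": int(match.group("crf")),  # "01" becomes 1
    }


print(parse_name("farscape_sample_x264.AAC2.0.CRF01-medium.mkv"))
```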

File Size

To start off, here are some tables:

| CRF | codec | placebo | slower | medium | veryfast | ultrafast |
|---|---|---|---|---|---|---|
| 1 | x264 | 1484.04 | 1530.13 | 2097.2 | 2315.65 | 3887.3 |
| 1 | x265 | 1852.19 | 1725.59 | 1796.31 | 1868.92 | 1279.54 |
| 17 | x264 | 259.2 | 271.1 | 280.49 | 253.99 | 545.81 |
| 17 | x265 | 180.46 | 176.28 | 172.7 | 157.22 | 120.96 |
| 18 | x264 | 222.7 | 233.1 | 242.13 | 216.36 | 481.01 |
| 18 | x265 | 155.92 | 153.02 | 150.45 | 138.04 | 108.97 |
| 20 | x264 | 169 | 175.89 | 184.06 | 160.7 | 372.08 |
| 20 | x265 | 119.82 | 118.64 | 117.38 | 108.76 | 89.14 |
| 21 | x264 | 149.09 | 154.72 | 162.14 | 140.05 | 328.22 |
| 21 | x265 | 106.38 | 105.71 | 104.65 | 97.42 | 80.92 |
| 25 | x264 | 97.14 | 98.39 | 102.9 | 87.7 | 199.32 |
| 25 | x265 | 70.44 | 70.63 | 70.62 | 66.04 | 57.58 |
| 26 | x264 | 88.53 | 89.2 | 93.12 | 79.28 | 176.59 |
| 26 | x265 | 64.4 | 64.65 | 64.74 | 60.57 | 53.38 |
| 51 | x264 | 24.5 | 24.05 | 23.77 | 23.38 | 27.59 |
| 51 | x265 | 21.6 | 21.61 | 22.56 | 21.44 | 22 |

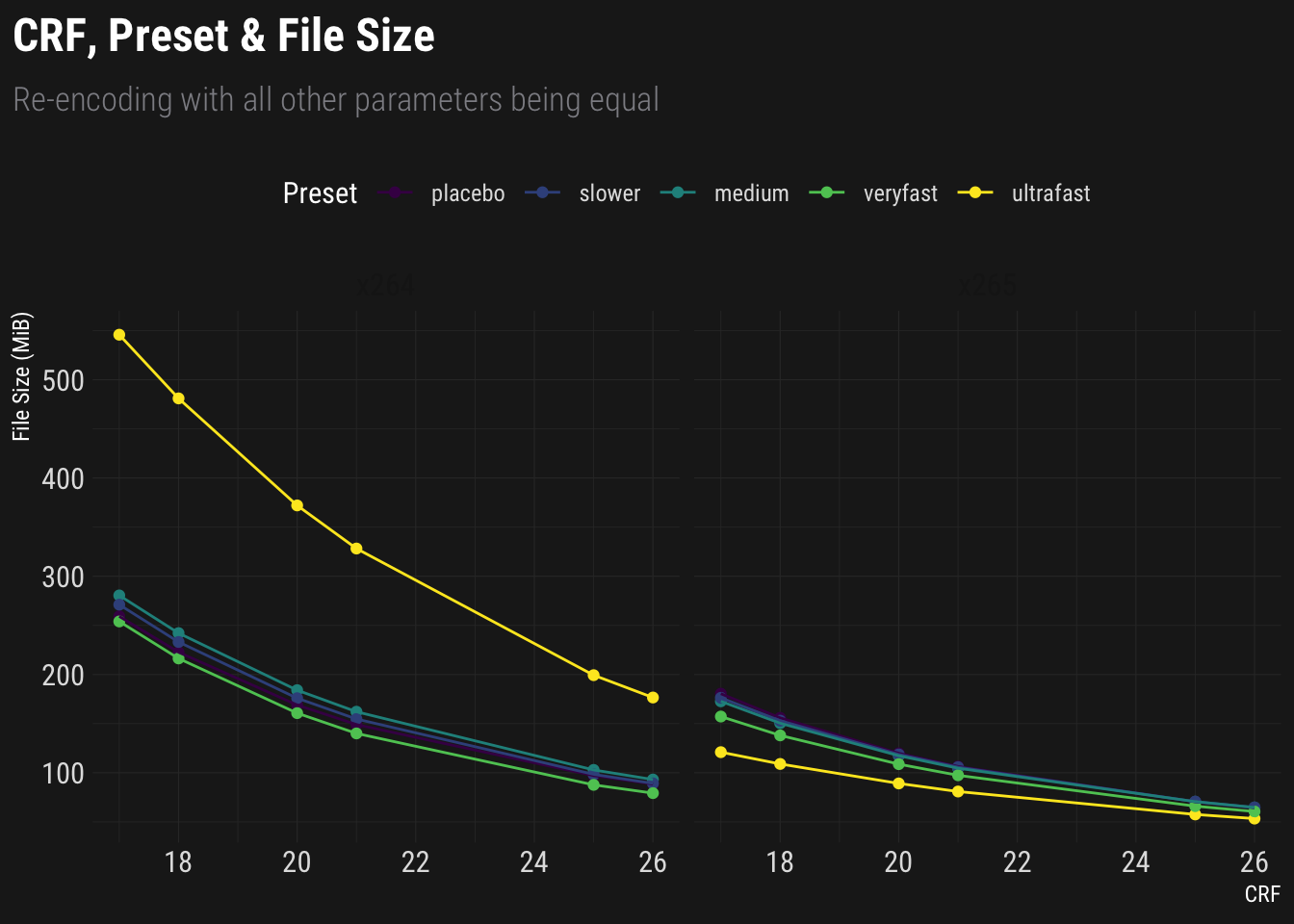

That’s… accurate, yet not very visually stimulating.

Needs more plot.

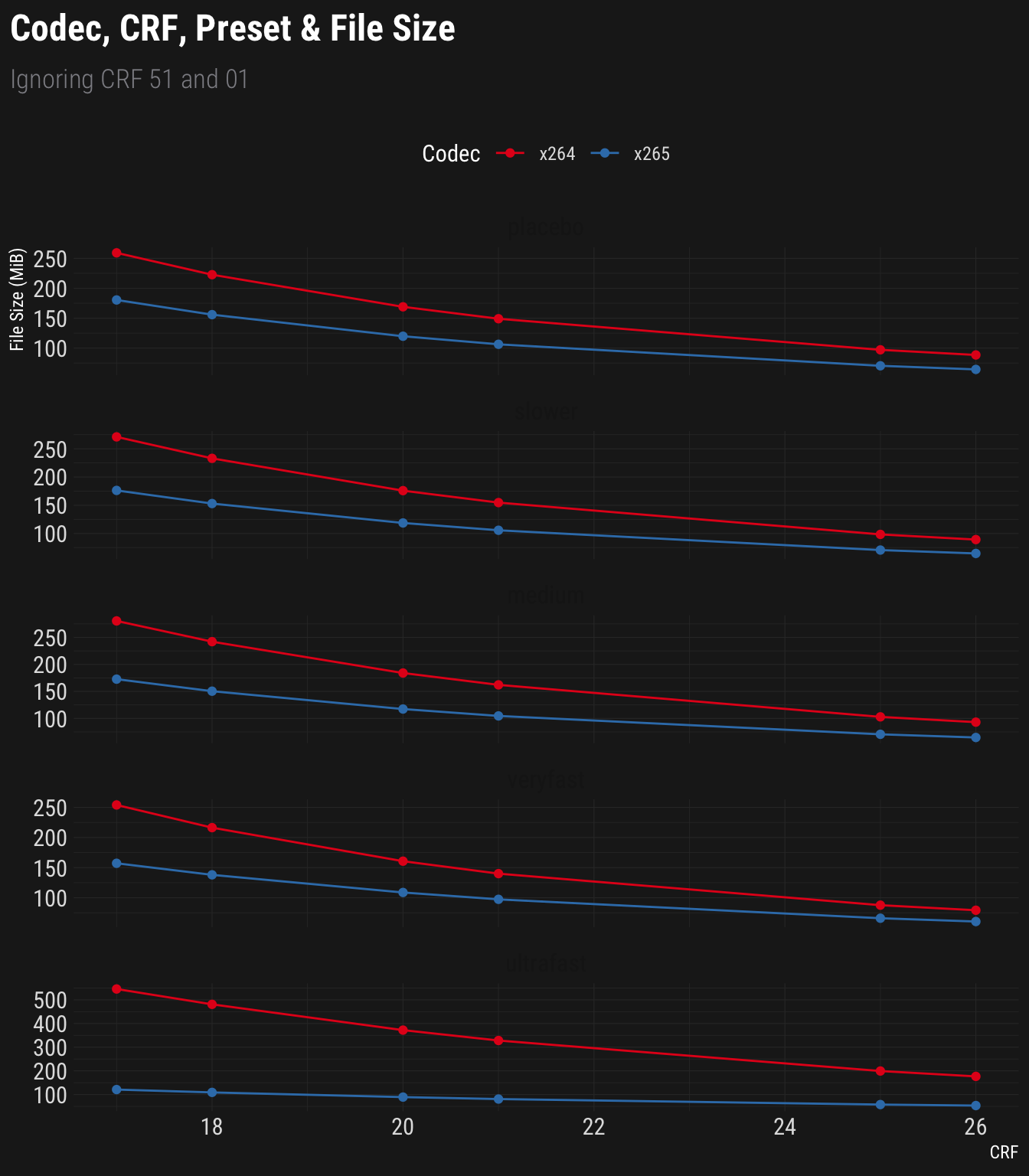

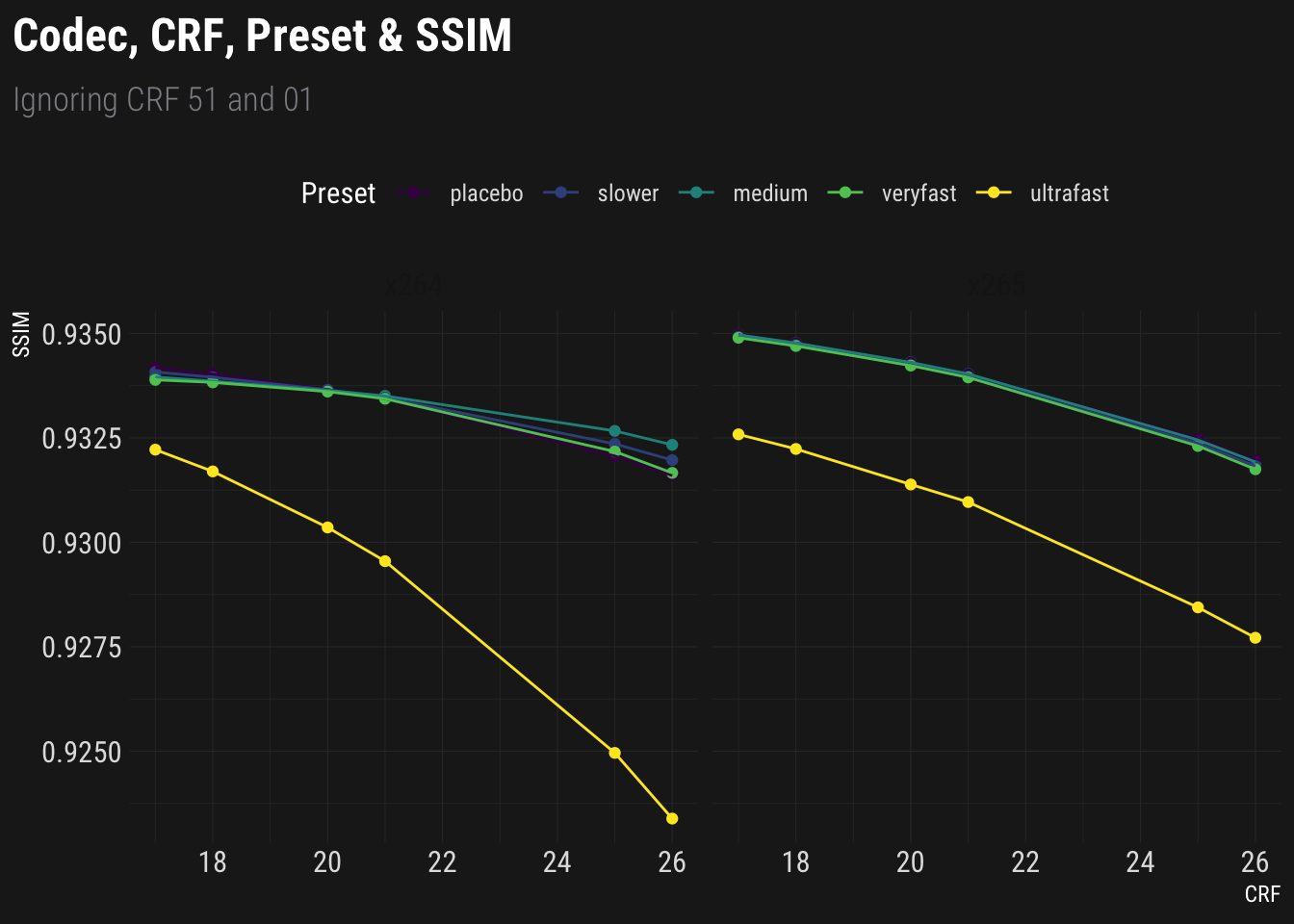

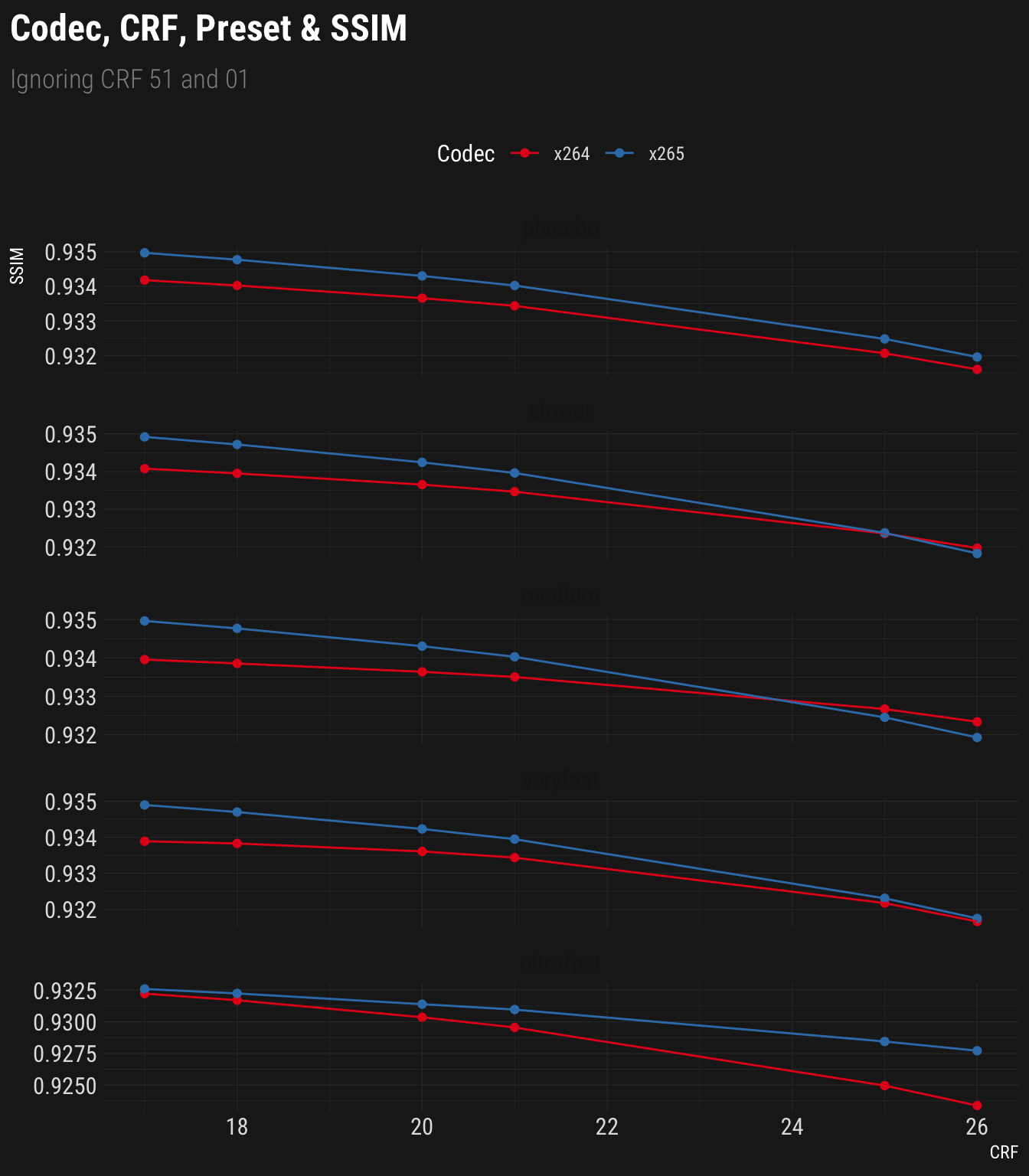

Okay, let’s zoom in a little by ignoring CRF 51 and CRF 01, as they’re silly anyway.

Hm, yes, quite.

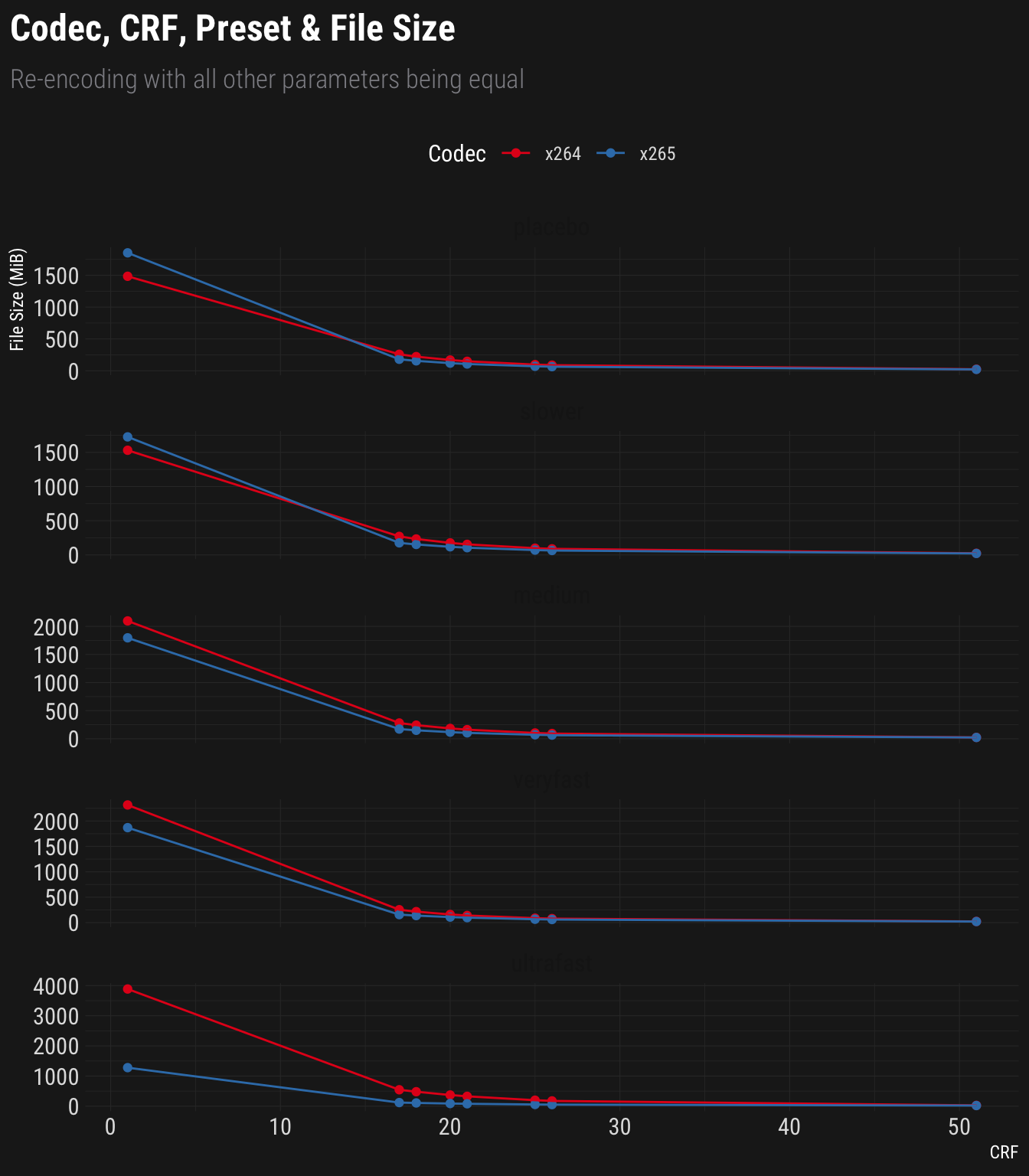

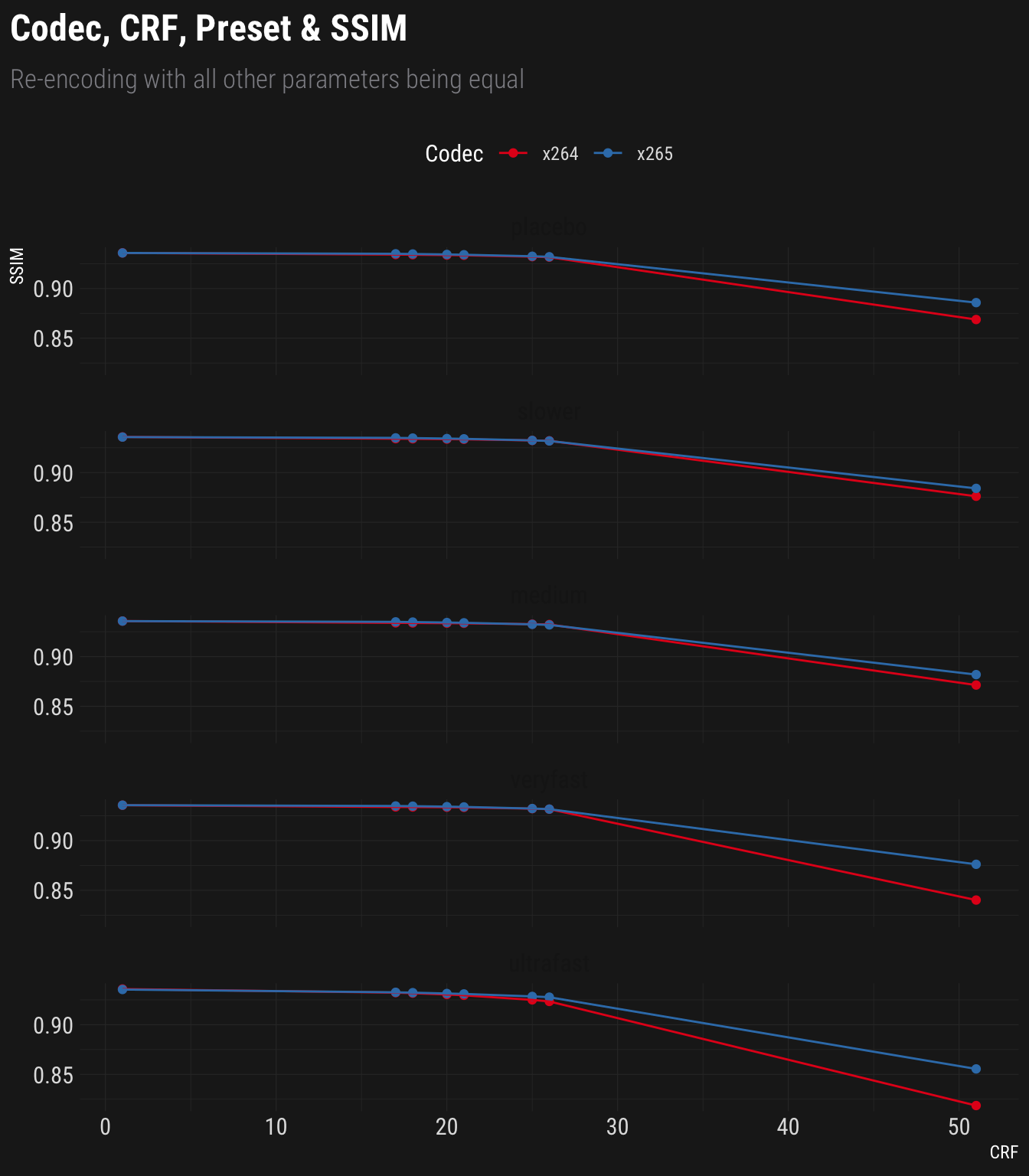

Now a breakdown to compare codecs across presets:

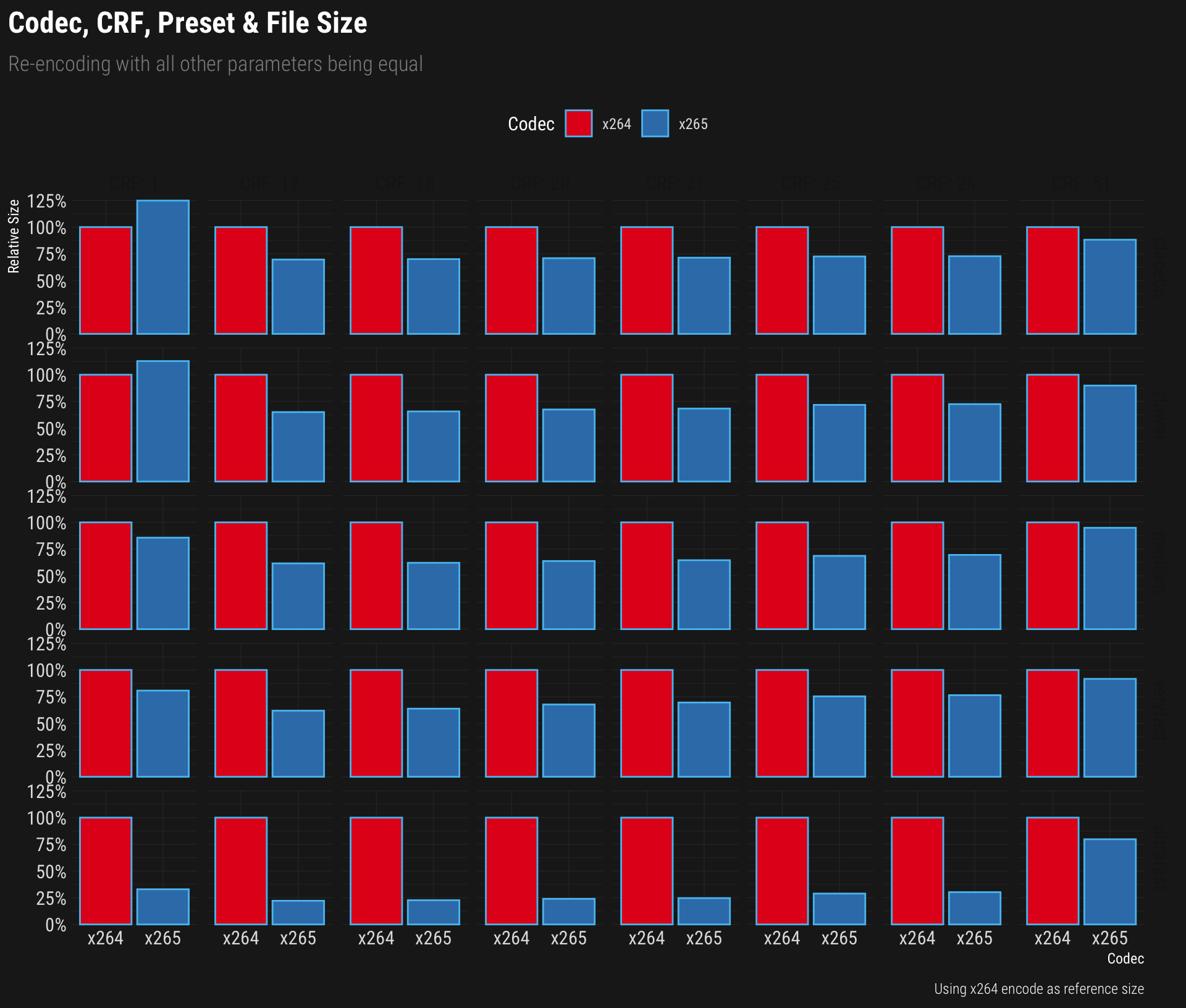

As you might have noticed, absolute file sizes might not be as interesting and/or generalizable as relative size changes, so here we go:
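One simple reading of “relative size” is the x265 file size as a fraction of the x264 file size at the same CRF and preset. The sketch below does that for three cells copied from the file size table; the dictionary layout and function name are my own, and it quietly assumes that equal CRF values are comparable across the two encoders, which is debatable.

```python
# (CRF, preset) -> file size, copied from the file size table above
X264 = {(20, "medium"): 184.06, (20, "veryfast"): 160.70, (25, "medium"): 102.90}
X265 = {(20, "medium"): 117.38, (20, "veryfast"): 108.76, (25, "medium"): 70.62}


def relative_size(x265_size, x264_size):
    # Fraction of the x264 size that the x265 file takes up.
    return x265_size / x264_size


for (crf, preset), x264_size in X264.items():
    ratio = relative_size(X265[(crf, preset)], x264_size)
    print(f"CRF {crf} {preset}: x265 is {ratio:.0%} of x264, "
          f"so about {1 - ratio:.0%} smaller")
```

For these three cells the x265 files come out at roughly two thirds of the x264 size.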

SSIM (Approximate Quality)

To start, let’s do the raw data table thing again:

| CRF | codec | placebo | slower | medium | veryfast | ultrafast |
|---|---|---|---|---|---|---|
| 1 | x264 | 93.6 | 93.6 | 93.6 | 93.6 | 93.6 |
| 1 | x265 | 93.6 | 93.6 | 93.6 | 93.6 | 93.5 |
| 17 | x264 | 93.4 | 93.4 | 93.4 | 93.4 | 93.2 |
| 17 | x265 | 93.5 | 93.5 | 93.5 | 93.5 | 93.3 |
| 18 | x264 | 93.4 | 93.4 | 93.4 | 93.4 | 93.2 |
| 18 | x265 | 93.5 | 93.5 | 93.5 | 93.5 | 93.2 |
| 20 | x264 | 93.4 | 93.4 | 93.4 | 93.4 | 93 |
| 20 | x265 | 93.4 | 93.4 | 93.4 | 93.4 | 93.1 |
| 21 | x264 | 93.3 | 93.3 | 93.4 | 93.3 | 93 |
| 21 | x265 | 93.4 | 93.4 | 93.4 | 93.4 | 93.1 |
| 25 | x264 | 93.2 | 93.2 | 93.3 | 93.2 | 92.5 |
| 25 | x265 | 93.2 | 93.2 | 93.2 | 93.2 | 92.8 |
| 26 | x264 | 93.2 | 93.2 | 93.2 | 93.2 | 92.3 |
| 26 | x265 | 93.2 | 93.2 | 93.2 | 93.2 | 92.8 |
| 51 | x264 | 86.9 | 87.6 | 87.1 | 84 | 81.9 |
| 51 | x265 | 88.6 | 88.4 | 88.2 | 87.6 | 85.5 |

Please note that I had to multiply the SSIM values by 100 to get them to display as something other than a flat 1 because rounding is hard, apparently.
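The rounding issue is easy to reproduce in plain Python, using the SSIM value from the first row of the results table. This only illustrates the arithmetic; it’s not the tool that actually built the table.

```python
ssim = 0.935785  # first row of the results table


def as_percent(value, digits=1):
    # Scale to 0..100 first, then round, so the decimals survive.
    return round(value * 100, digits)


print(round(ssim))       # 1
print(as_percent(ssim))  # 93.6
```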

Also, yes I know the “sum” column/row doesn’t make sense, but it’s the default and I couldn’t be bothered to try to remove it.

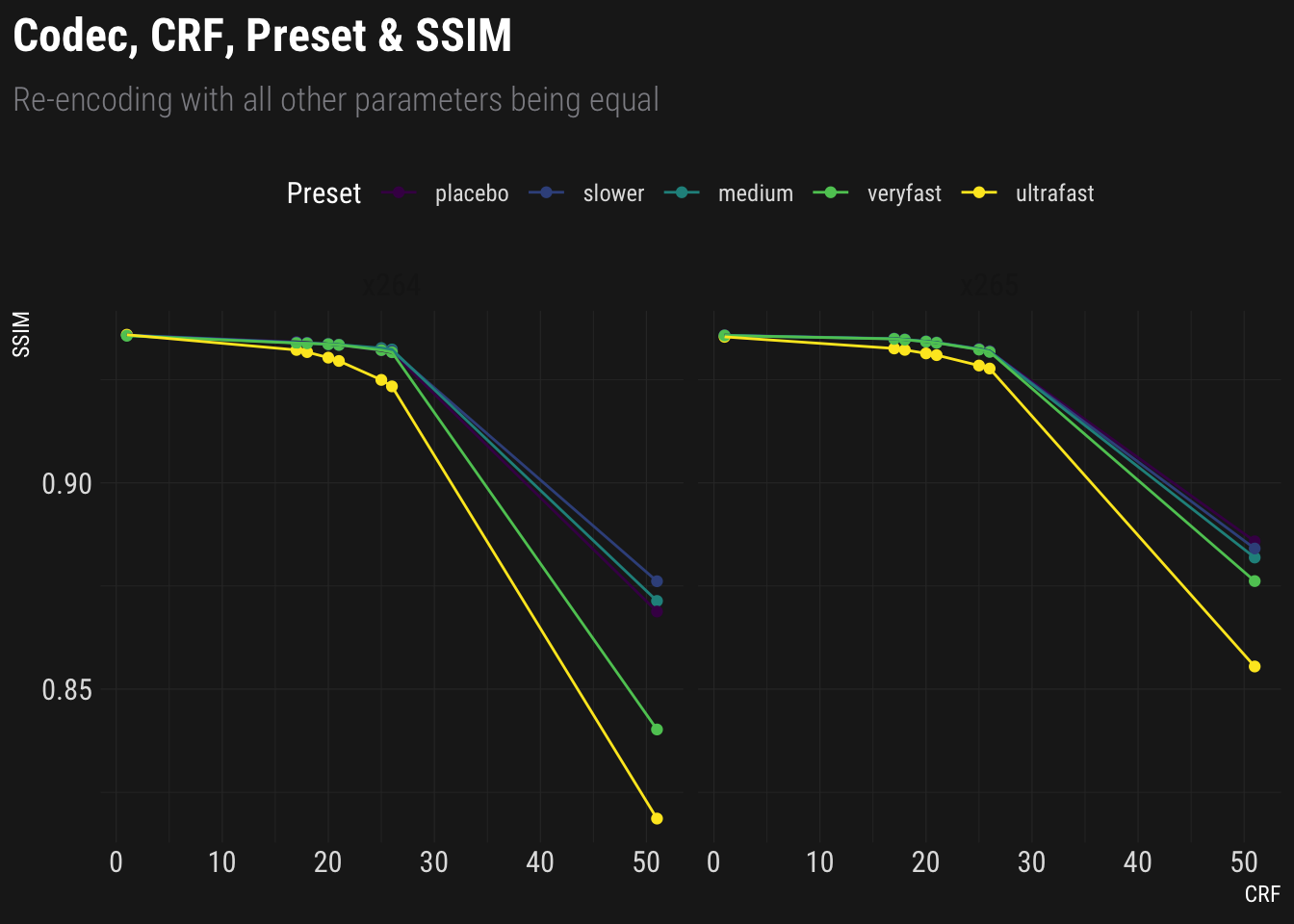

And now, the plotty thing.

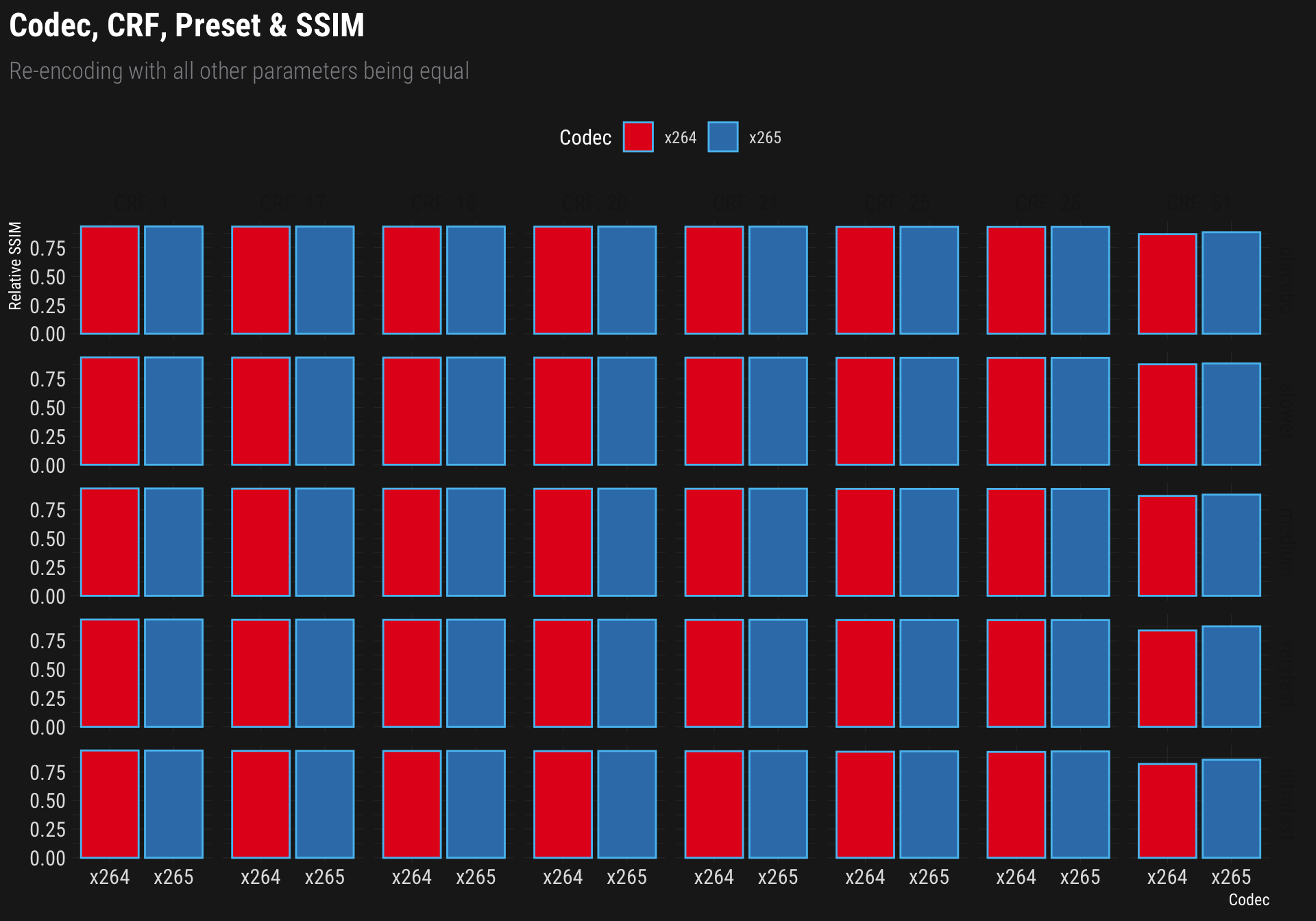

Now let’s do that thing again where we compare all the CRF by preset cells in a grid, but now using SSIM as a metric:

Well that’s not very enlightening, is it?

Bummer.

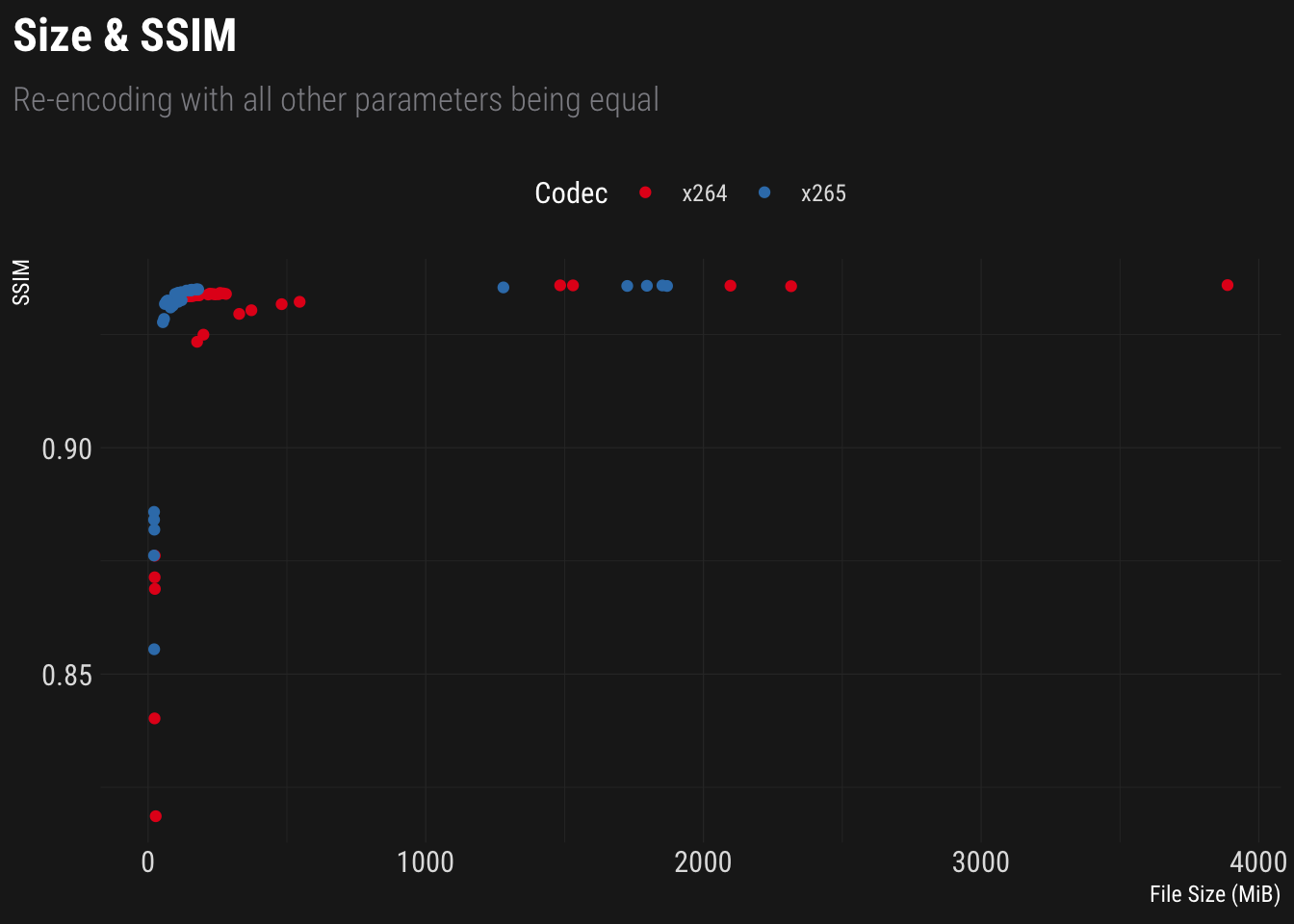

Quality(ish) versus Size

I’ve tried log scales on this one, but it didn’t really help.

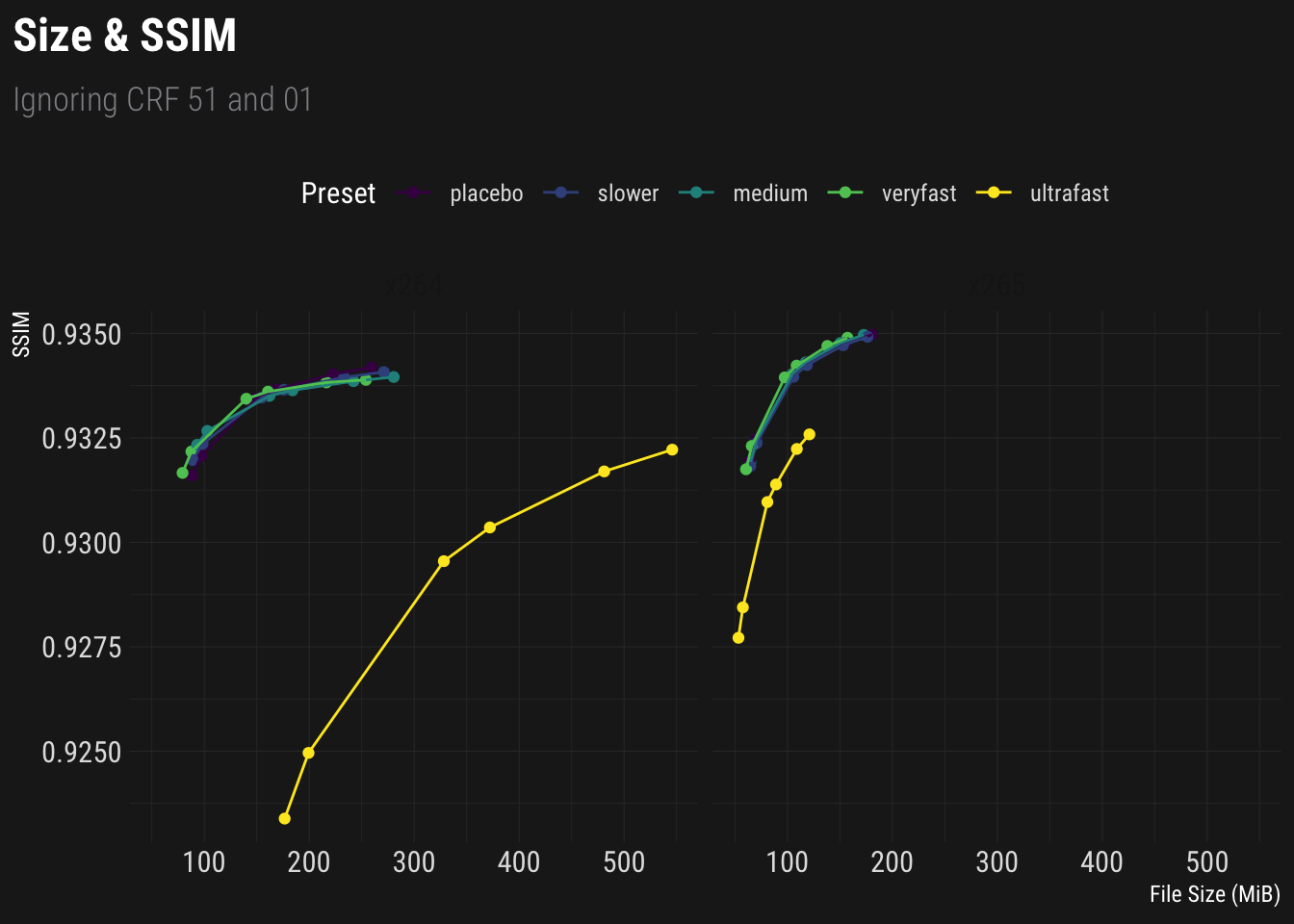

Let’s look at the subset of reasonable CRFs:

Well if there’s a lesson here, it seems that ultrafast is probably not the way to go.

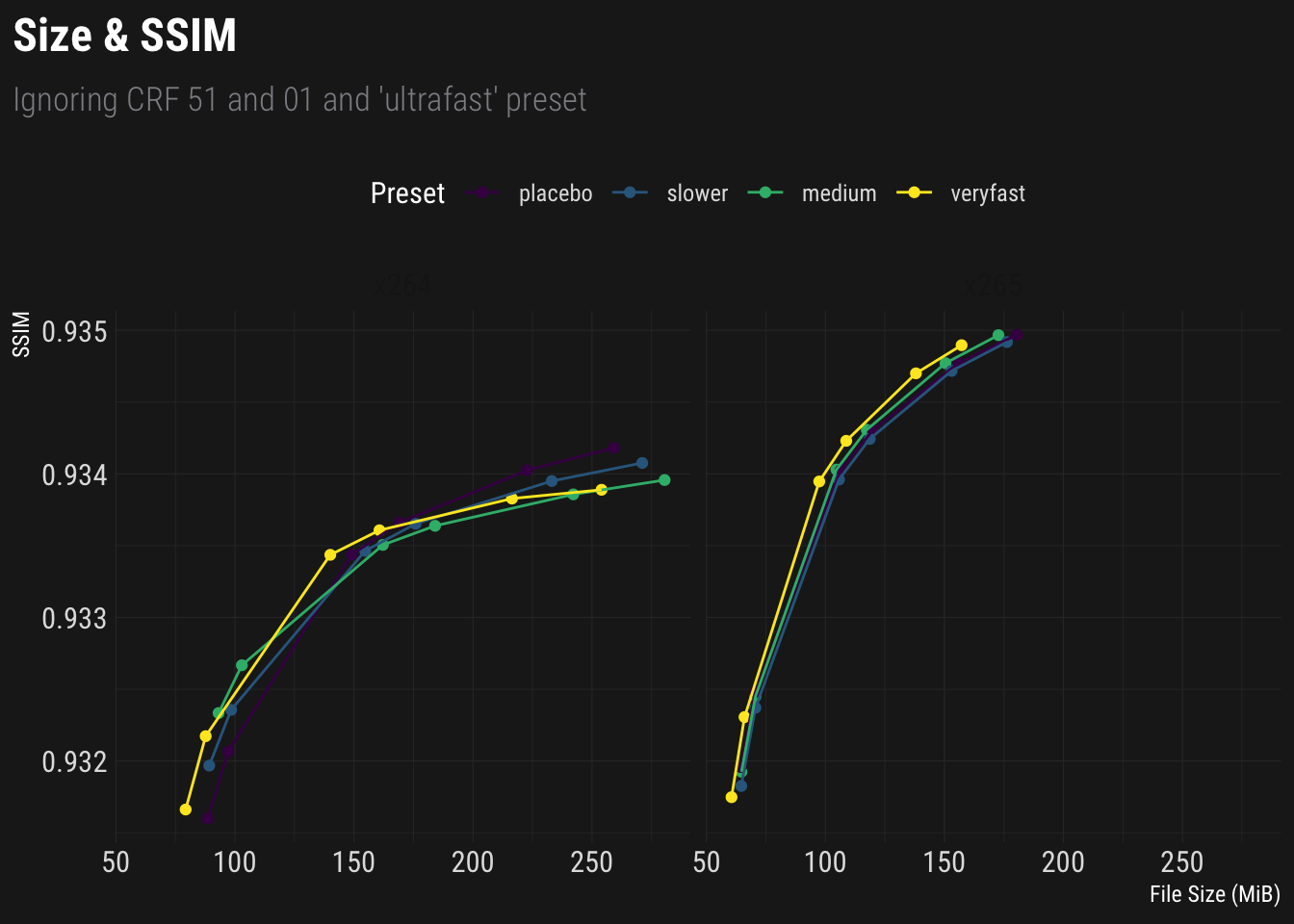

Let’s take another look, ignoring the ultrafast data.
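The same filtering step can be sketched in a few lines of Python, using a handful of rows copied from the two tables above (sizes from the file size table, SSIM as the ×100 values). The SSIM threshold of 93.4 is an arbitrary pick for illustration, not a recommendation.

```python
# (codec, preset, CRF, size, SSIM x 100), copied from the tables above
ROWS = [
    ("x264", "medium", 20, 184.06, 93.4),
    ("x265", "medium", 20, 117.38, 93.4),
    ("x264", "veryfast", 20, 160.70, 93.4),
    ("x265", "veryfast", 20, 108.76, 93.4),
    ("x264", "ultrafast", 20, 372.08, 93.0),
    ("x265", "ultrafast", 20, 89.14, 93.1),
    ("x264", "medium", 25, 102.90, 93.3),
    ("x265", "medium", 25, 70.62, 93.2),
]

MIN_SSIM = 93.4  # arbitrary threshold, chosen only for this example

# Drop ultrafast, keep rows that reach the threshold, smallest file first.
candidates = sorted(
    (row for row in ROWS if row[1] != "ultrafast" and row[4] >= MIN_SSIM),
    key=lambda row: row[3],
)

best = candidates[0]
print(f"smallest file at SSIM >= {MIN_SSIM}: "
      f"{best[0]} {best[1]} CRF {best[2]} ({best[3]})")
```

On this small subset, the smallest file that still reaches the threshold is the x265 veryfast encode at CRF 20.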

Conclusion

Keep in mind that this is not a scientific study.

The results might be limited to my version of HandBrake (1.0.7 (2017040900)), or to re-encoding a lossily-encoded file, or to encoding SD content, and things might behave slightly differently with 4K content. My point is: I don’t know. I have no idea how generalizable these results are, but with the limited amount of certainty I can muster, I’ll give you this:

- Don’t use ultrafast. veryfast is fast as well, and apparently better(ish).

- Also, don’t use placebo. Why would you even do that to yourself? [1]

- Keep your CRF around the 20s. Seems reasonable.

¯\_(ツ)_/¯

Note: If you have anything else you want to try with the data, you can grab it here.

[1] If I do this again, I will track the encoding time. Seriously, don’t do placebo.